A short history of AI

How AI changed over time and what has remained the same since the 1950s.

05/2024

Welcome to a short history lesson on artificial intelligence (AI), a technology area that has fascinated humans since the 1950s. The idea of crafting machines capable of thinking and helping humans is not a modern notion, but one that has evolved significantly over decades.

In this article, we will highlight a few major milestones and explain what led to the AI breakthrough that brought AI onto seemingly every business roadmap.

Birth of AI (1950s)

The birth of AI can be traced back to two pivotal moments in the mid-20th century. In 1950, Alan Turing, a pioneering British mathematician, published a paper titled “Computing Machinery and Intelligence”. This groundbreaking work introduced what is now known as the Turing Test, a method to determine whether a machine can exhibit intelligent behavior indistinguishable from that of a human, which he called “the imitation game.”

Just a few years later, in 1955, John McCarthy, another key figure in the history of AI, co-authored the proposal for a summer workshop at Dartmouth College, which took place in 1956. The proposal is notable for being the first instance where the term “artificial intelligence” was used, and the workshop helped establish the term in common language.

Early AI progress (late 1950s - 1970s)

Between 1957 and 1979, artificial intelligence saw significant advancements, starting with John McCarthy’s creation of LISP in 1958, one of the first programming languages designed specifically for AI research. Around the same time, in 1959, Arthur Samuel introduced the term “machine learning” to describe his checkers-playing program, which learned to play better than its own programmer.

The development of AI continued with the creation of the first “expert system”, which emulated the decision-making of human experts. During that time, Joseph Weizenbaum, a German American computer scientist, created ELIZA, the first chatbot, which simulated conversation in natural language using simple pattern matching.

Additionally, in the late 1960s, Alexey Ivakhnenko, a Soviet and Ukrainian mathematician, proposed the first foundational methods that paved the way for what is now known as deep learning. These early years brought many discoveries and concepts that proved crucial to how today’s AI models work.

The first AI Boom (1980s)

During the first AI boom, some significant advancements caught the world’s attention.

To mention only a few:

- The first expert system was commercialized, simplifying the process of ordering computer systems.

- The first autonomous drawing program was created.

- A team at Bundeswehr University of Munich created and demonstrated the first driverless car. This car could travel up to 55 mph on roads without any other cars or people, showing just how far AI technology had already come.

AI winter (late 1980s - early 1990s)

Despite the first AI products reaching commercial markets and a number of promising research projects, enthusiasm for AI technologies cooled significantly, a period often called the “AI winter”. Setbacks in many early AI projects led to skepticism about the practical value of AI research. As funding dried up, so did the momentum for new discoveries.

Universities and private laboratories that once buzzed with ideas and experimentation faced budget cuts, leading to a decline in the development of new AI tools and applications. This period was marked by a general disappointment as the promises of AI had not been realized, and the initial excitement turned into disillusionment.

Specialized AI solutions (late 1990s - 2010s)

Following the AI winter, a new phase emerged, focused on specialized AI solutions tailored to specific tasks. Over the following years, highly specialized AI systems became increasingly integrated into innovative tools and services. In this stage, AI development shifted toward applications that could perform specific functions exceptionally well.

In 1997, for example, IBM’s Deep Blue beat the reigning world chess champion, Garry Kasparov, becoming the first computer program to defeat a world champion in a match under standard tournament conditions. In the following years, tech companies like Facebook started to integrate AI into their advertising algorithms, optimizing the distribution and performance of their ad services. In 2011, Apple released its first virtual assistant, Siri.

Then, from 2010 onwards, AI saw a new upswing, driven by access to much larger amounts of data and by more computing power:

Firstly, the availability of large amounts of data transformed how AI systems could learn and adapt. Unlike in the past, when data had to be meticulously collected and prepared, massive datasets were now readily accessible online. This shift allowed developers to train AI systems more comprehensively and accurately, enabling them to recognize patterns and make decisions with greater precision.

Secondly, the technological leap in processing power, particularly through the use of advanced graphics processing units (GPUs), revolutionized AI computation. These processors, originally designed for rendering graphics in video games, proved to be incredibly effective for performing the complex mathematical calculations required for training AI systems. This meant that tasks which once took weeks could now be completed in just hours or days, significantly speeding up the development cycle of AI technologies.

As a result, AI became more cost-effective and accessible, paving the way for more AI progress.

The AI breakthrough: "Attention Is All You Need" & new "transformer" architectures (2017 - 2022)

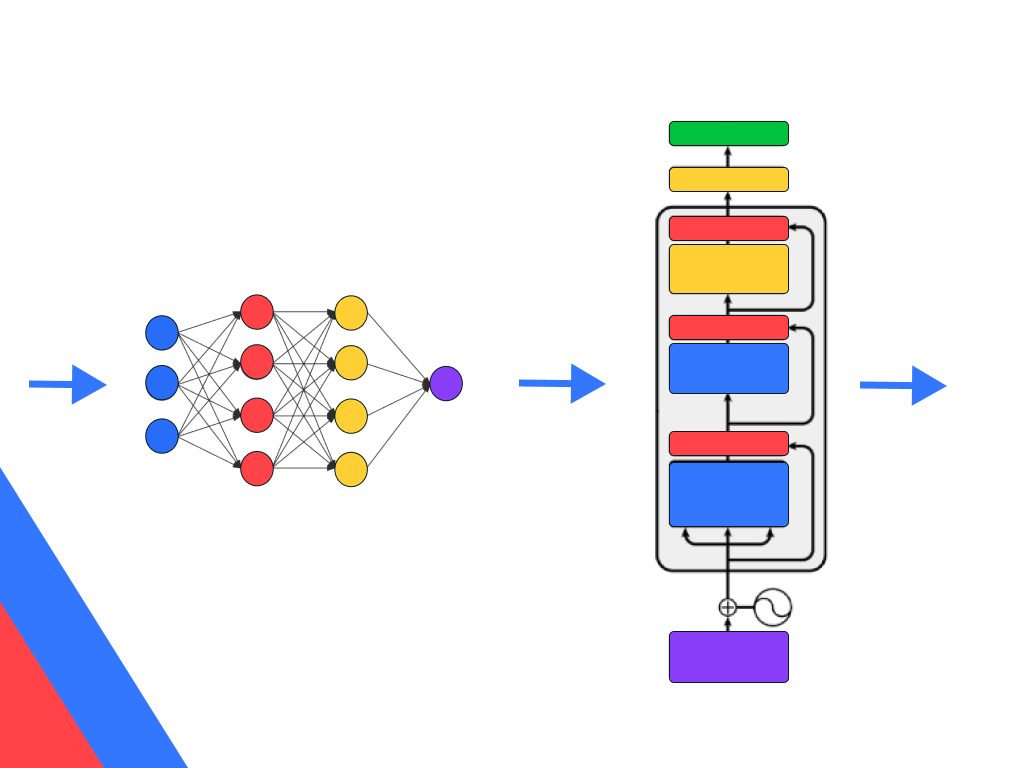

With larger amounts of data available, AI models were tasked with problems that required them to “understand” or process ever more data, including sequential data that had to be interpreted in context. In a text or document, for example, the order of words is crucial to the overall meaning, and AI models at the time struggled with exactly this.

Traditional neural networks had a hard time remembering earlier information as they processed new data, which made it difficult for them to handle complex contexts. Recurrent Neural Networks (RNNs) and their advanced version, Long Short-Term Memory networks (LSTMs), were developed to address this issue. They improved the ability of models to remember information from earlier in the sequence, which was crucial for tasks like translation or speech recognition.

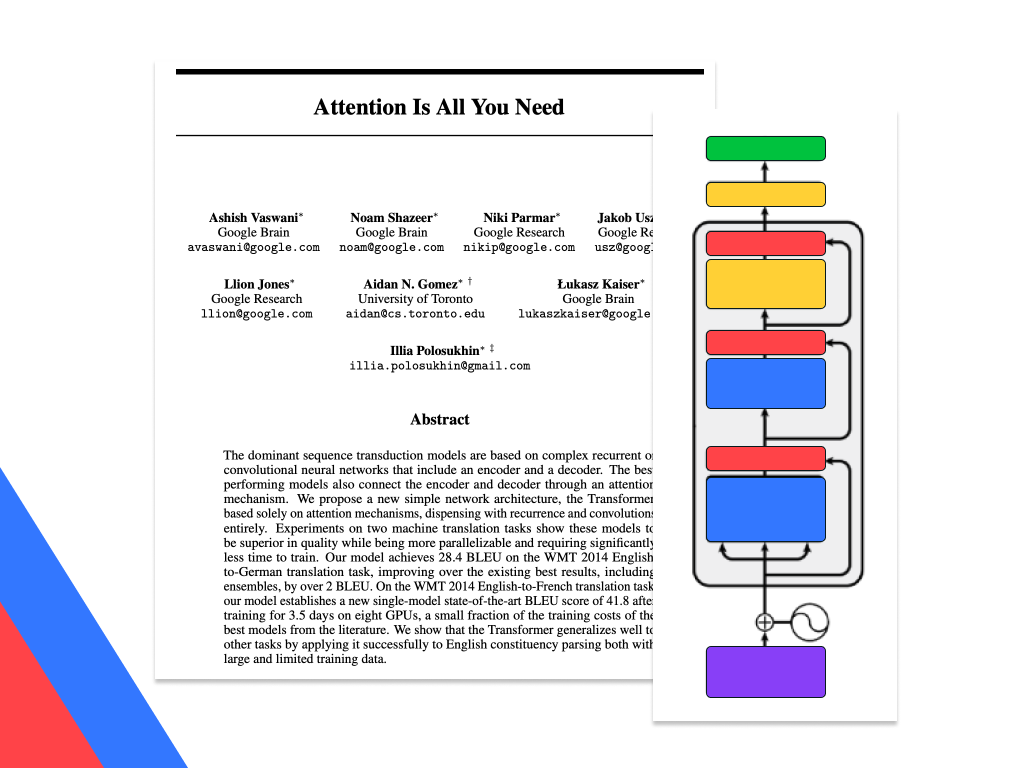

However, the foundation for the AI breakthrough that followed in 2022 was laid by the Transformer model, introduced in the 2017 paper “Attention Is All You Need” by researchers at Google. This new architecture changed how machines handle sequences by using what’s known as an “attention mechanism.” Unlike RNNs and LSTMs, which process data one step at a time, Transformers look at entire sequences at once. This allows the model to weigh the importance of every part of the input simultaneously, making it far more effective at picking up on relationships and dependencies across long texts.
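To make the idea of “weighing the importance of every part of the input” a little more concrete, here is a minimal, illustrative sketch of scaled dot-product attention, the core operation of the Transformer, written in plain Python. It is a simplification under stated assumptions: real Transformers learn separate query, key and value projections, use many attention “heads” and run as matrix operations on GPUs. Here the raw token vectors are simply reused as queries, keys and values, and the three-token “sentence” with made-up two-dimensional embeddings is purely hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Simplified scaled dot-product attention over a whole sequence.

    queries, keys, values: lists of equal-length vectors, one per token.
    Returns one output vector per query and the attention weights used.
    """
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Compare this token with EVERY token in the sequence at once
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of all value vectors
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 3-token sequence with 2-dimensional embeddings (made-up numbers).
# Simplification: the same vectors serve as queries, keys and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 2) for w in row])
```

Each row of `weights` shows how strongly one token attends to every token in the sequence, and each row sums to 1. Although this sketch loops over the queries for readability, no row depends on the result of another, which is what lets Transformers process all positions in parallel. An RNN, by contrast, has to finish step t before it can start step t+1.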

This breakthrough not only made AI models faster and more scalable but also significantly improved their performance on complex tasks involving large amounts of data. It was especially the scalability that opened the doors to today’s extremely powerful AI foundation models: models based on the Transformer architecture could now be scaled up in size, and their capabilities grew along with it (in some cases even disproportionately).

Companies like OpenAI were among the first to recognize this and created an environment that allowed them to build very large AI models, both financially and technically: they raised large amounts of investment, hired many leading AI researchers, and bought a lot of computing resources. The release of ChatGPT on November 30, 2022 can therefore be considered the breakthrough moment for AI.

Within only 5 days, ChatGPT reached 1 million users, and within 2 months it had reached 100 million, a pace of adoption never seen before. What followed was an equally unprecedented success story for AI.

Today's state-of-the-art AI

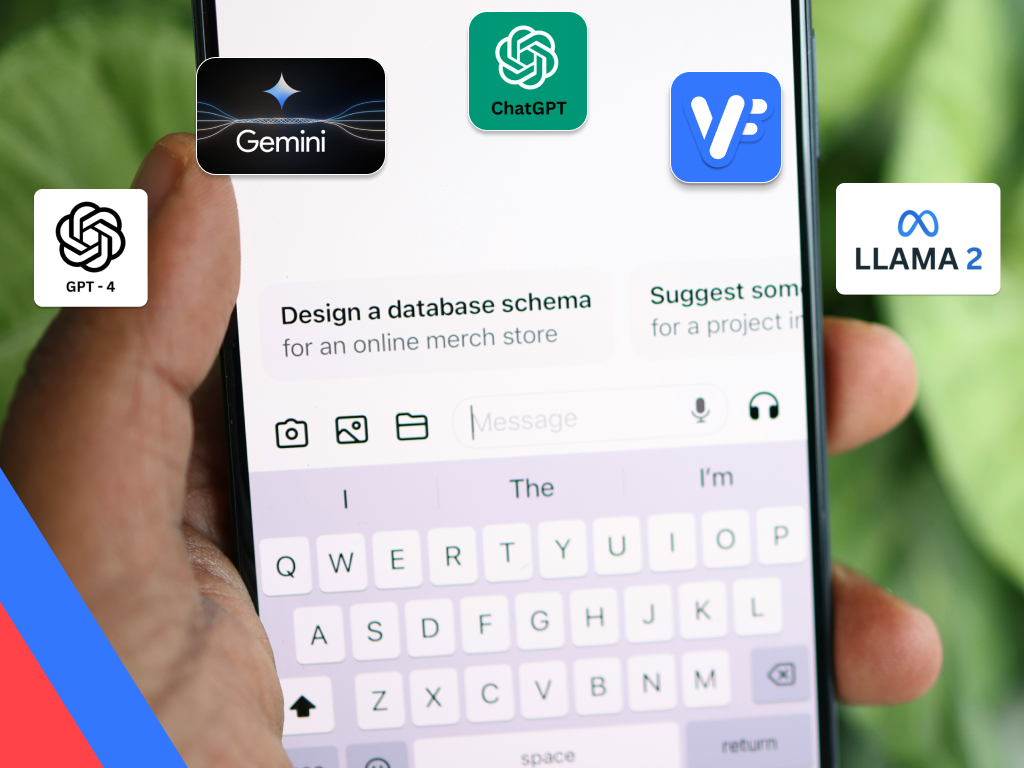

After the quiet technical breakthrough (the Transformer architecture) and the rapid breakthrough in public attention brought by ChatGPT, big tech companies and well-funded startups started a race to develop the next best large AI models able to understand and respond to human language.

These models, often called Large Language Models or LLMs, can interact with users through simple, conversational prompts. This means that people can ask questions or give commands in everyday language, and the AI can provide answers or perform tasks accordingly. This shift represents a significant change from the past, where AI solutions were typically developed by technical departments to tackle specific problems.

Large Language Models now offer an improved way of interacting with AI and can potentially address many different use cases, because they generalize much better than earlier, task-specific systems. These new models are viewed as an entirely new technology platform, comparable to groundbreaking shifts like the internet, mobile technology, and cloud computing.

The emergence of LLMs has created a new category of technology that, on the one hand, requires new tools and skills and, on the other hand, offers unprecedented capabilities, such as generating and understanding text, images, audio, and video, or planning and reasoning.

The impact of LLMs is profound, challenging existing jobs and disrupting industries (see this extensive McKinsey study for more information). Some jobs will disappear, while others will adapt and evolve as a result of this technological shift. AI has reached an inflection point, which means there is no turning back from the transformative effects it is set to have on society.

This technology shift is not without its dangers and problems, but its upside potential is at least as large, and probably much larger. For businesses, this means it is essential to start integrating this technology into their daily operations and learn how to benefit from it. With ValueFlow, we offer an easy entry point into using these powerful models.

May 2024 | ValueFlow team

Reach out with comments or suggestions:

comment@valueflow.ai